Abstract

In household robotics, the Zero-Shot Object Navigation (ZSON) task requires an agent to traverse unfamiliar environments and locate objects from novel categories without explicit prior training. This paper introduces VoroNav, a semantic exploration framework that proposes the Reduced Voronoi Graph to extract exploratory paths and planning nodes from a semantic map constructed in real time. Drawing on topological and semantic information, VoroNav generates text-based descriptions of paths and images that a large language model (LLM) can readily interpret. Combining path and farsight descriptions to represent the environmental context lets the LLM apply commonsense reasoning to select waypoints for navigation. Extensive evaluation on the HM3D and HSSD datasets shows that VoroNav surpasses existing ZSON methods in both success rate and exploration efficiency (+2.8% Success and +3.7% SPL on HM3D, +2.6% Success and +3.8% SPL on HSSD). Newly introduced metrics that evaluate obstacle avoidance proficiency and perceptual efficiency further corroborate the improvements our method achieves in ZSON planning.
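The Success and SPL figures quoted above follow the standard definitions used in embodied navigation: Success is the fraction of episodes in which the target is found, and SPL (Success weighted by Path Length) scales each successful episode by the ratio of the shortest-path length to the length of the path the agent actually took. The sketch below shows how the two numbers could be computed from per-episode logs. The function and argument names are illustrative assumptions, not taken from the VoroNav code.

```python
from typing import Sequence


def success_rate(successes: Sequence[bool]) -> float:
    """Fraction of episodes that ended in success."""
    return sum(successes) / len(successes)


def spl(
    successes: Sequence[bool],
    shortest_lengths: Sequence[float],
    path_lengths: Sequence[float],
) -> float:
    """Success weighted by Path Length.

    SPL = (1/N) * sum_i S_i * l_i / max(p_i, l_i), where S_i is the
    success flag, l_i the shortest-path length from start to goal,
    and p_i the length of the path the agent actually travelled.
    """
    total = 0.0
    for succeeded, shortest, travelled in zip(
        successes, shortest_lengths, path_lengths
    ):
        denominator = max(travelled, shortest)
        # Failed episodes contribute zero; the guard avoids dividing by
        # zero for a degenerate episode with zero-length paths.
        if succeeded and denominator > 0:
            total += shortest / denominator
    return total / len(successes)


# Hypothetical episode logs, made up for illustration only.
example_successes = [True, False, True]
example_shortest = [5.0, 4.0, 10.0]
example_travelled = [10.0, 8.0, 10.0]
example_spl = spl(example_successes, example_shortest, example_travelled)
```

In this made-up example the first episode succeeds with a path twice the optimal length (contributing 0.5), the second fails (contributing 0), and the third succeeds along the optimal path (contributing 1.0), so SPL rewards both finding the target and reaching it efficiently.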

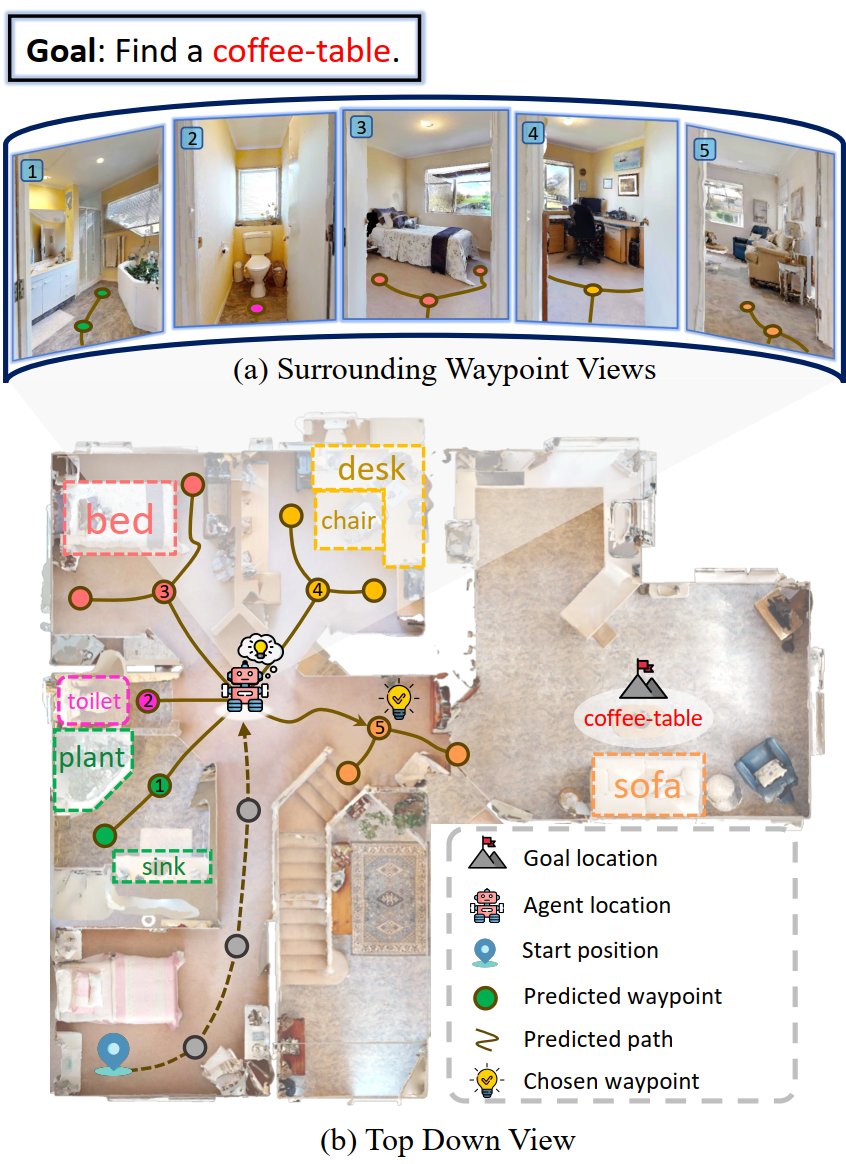

Navigation Overview

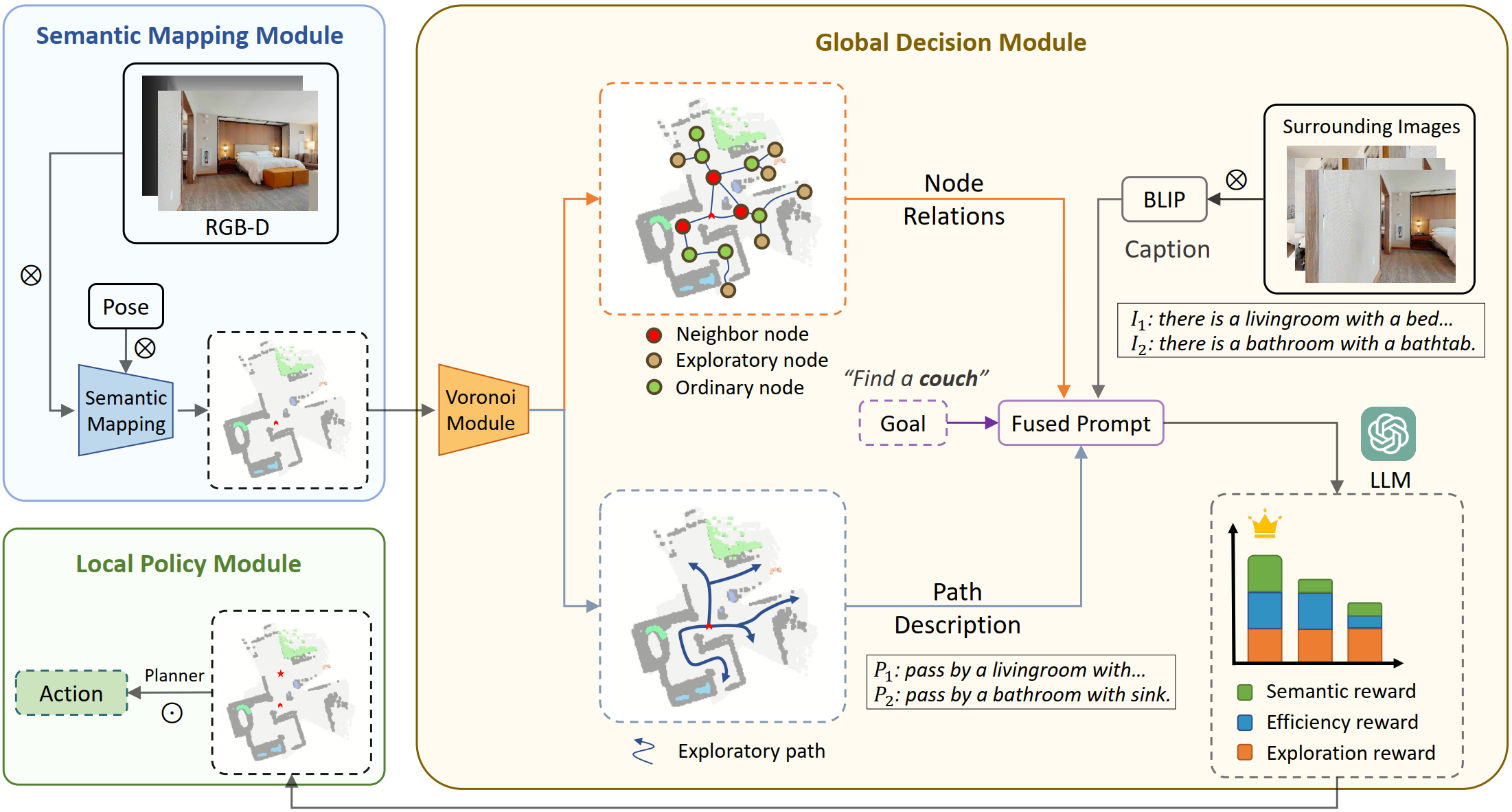

Model Framework

The VoroNav framework comprises three modules: the Semantic Mapping Module, the Global Decision Module, and the Local Policy Module.
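One way to picture how the three modules could fit together is a loop in which the map is updated from each observation, a waypoint is chosen from the candidate planning nodes, and a low-level step moves the agent toward it. The following is a toy sketch under heavy assumptions, not the authors' implementation: the grid world, the `score` callable standing in for the LLM's waypoint choice, the hand-supplied candidate list standing in for Reduced Voronoi Graph nodes, and every function name are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]
SemanticMap = Dict[Cell, str]  # toy semantic map: observed cell -> object label


def semantic_mapping(sem_map: SemanticMap, observation: SemanticMap) -> SemanticMap:
    """Semantic Mapping Module (toy): fuse the latest observation into the map."""
    sem_map.update(observation)
    return sem_map


def global_decision(
    sem_map: SemanticMap,
    candidates: List[Cell],
    score: Callable[[SemanticMap, Cell], float],
) -> Cell:
    """Global Decision Module (toy): pick the best-scoring candidate waypoint.

    `score` is a hypothetical stand-in for querying an LLM.
    """
    return max(candidates, key=lambda cell: score(sem_map, cell))


def local_policy(position: Cell, waypoint: Cell) -> Cell:
    """Local Policy Module (toy): take one grid step toward the waypoint."""
    (x, y), (wx, wy) = position, waypoint
    if x != wx:
        return (x + (1 if wx > x else -1), y)
    if y != wy:
        return (x, y + (1 if wy > y else -1))
    return position


def navigate(
    world: Dict[Cell, str],
    start: Cell,
    target: str,
    candidates: List[Cell],
    score: Callable[[SemanticMap, Cell], float],
    max_steps: int = 50,
) -> Optional[Cell]:
    """Run the map -> decide -> act loop until the target label is observed."""
    sem_map: SemanticMap = {}
    visited: Set[Cell] = set()
    position = start
    for _ in range(max_steps):
        visited.add(position)
        # Toy perception: the agent only sees the label of its own cell.
        observation = {position: world[position]} if position in world else {}
        sem_map = semantic_mapping(sem_map, observation)
        if target in sem_map.values():
            return position
        remaining = [cell for cell in candidates if cell not in visited]
        if not remaining:
            return None  # every candidate explored without finding the target
        waypoint = global_decision(sem_map, remaining, score)
        position = local_policy(position, waypoint)
    return None


# Hypothetical scene and scoring rule, made up for illustration only.
toy_world = {(1, 0): "sofa", (3, 0): "tv", (0, 3): "sink"}
toy_candidates = [(0, 3), (3, 0)]
found_at = navigate(
    toy_world,
    start=(0, 0),
    target="tv",
    candidates=toy_candidates,
    score=lambda sem_map, cell: 1.0 if cell == (3, 0) else 0.0,
)
```

Keeping the scoring rule as a plain callable makes the division of labor visible: the mapping and local-policy steps stay fixed, while the waypoint choice is the part that would be delegated to the LLM.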

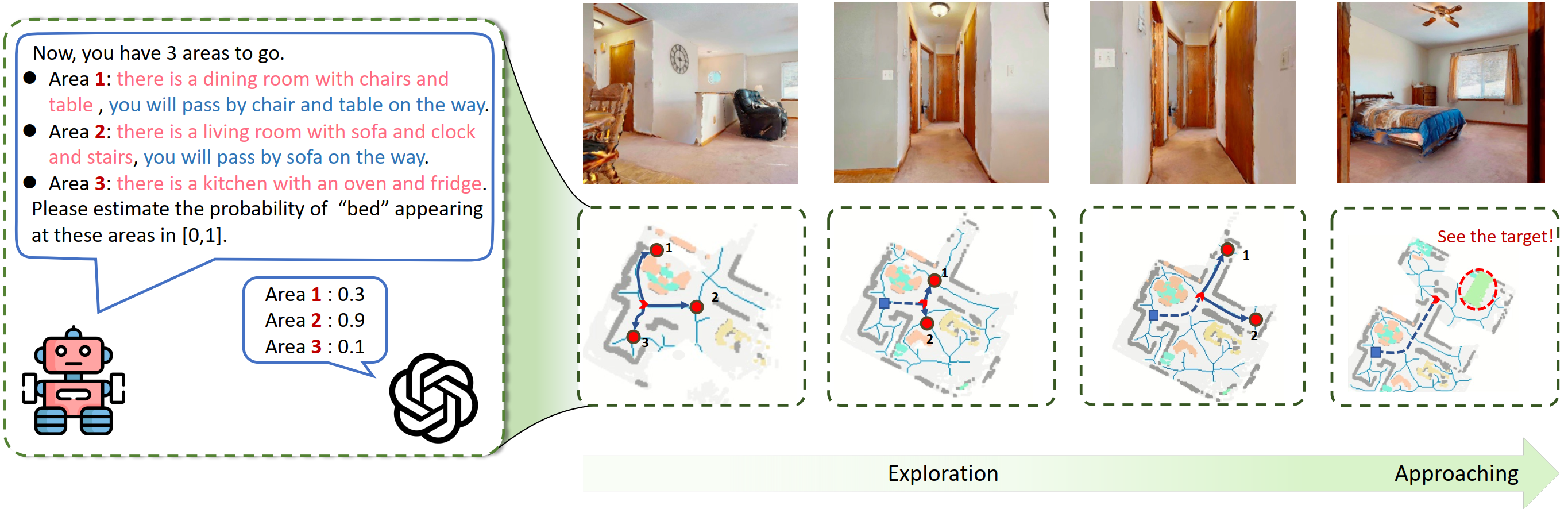

Simulation Experiments

Simulation Demos

Guided by the LLM, the robot efficiently explores the unseen indoor environment and successfully finds the target (the demos are played at 2x speed).

BibTeX

@article{wu2024voronav,
  title={VoroNav: Voronoi-based Zero-shot Object Navigation with Large Language Model},
  author={Wu, Pengying and Mu, Yao and Wu, Bingxian and Hou, Yi and Ma, Ji and Zhang, Shanghang and Liu, Chang},
  journal={arXiv preprint arXiv:2401.02695},
  year={2024}
}

Acknowledgement

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.